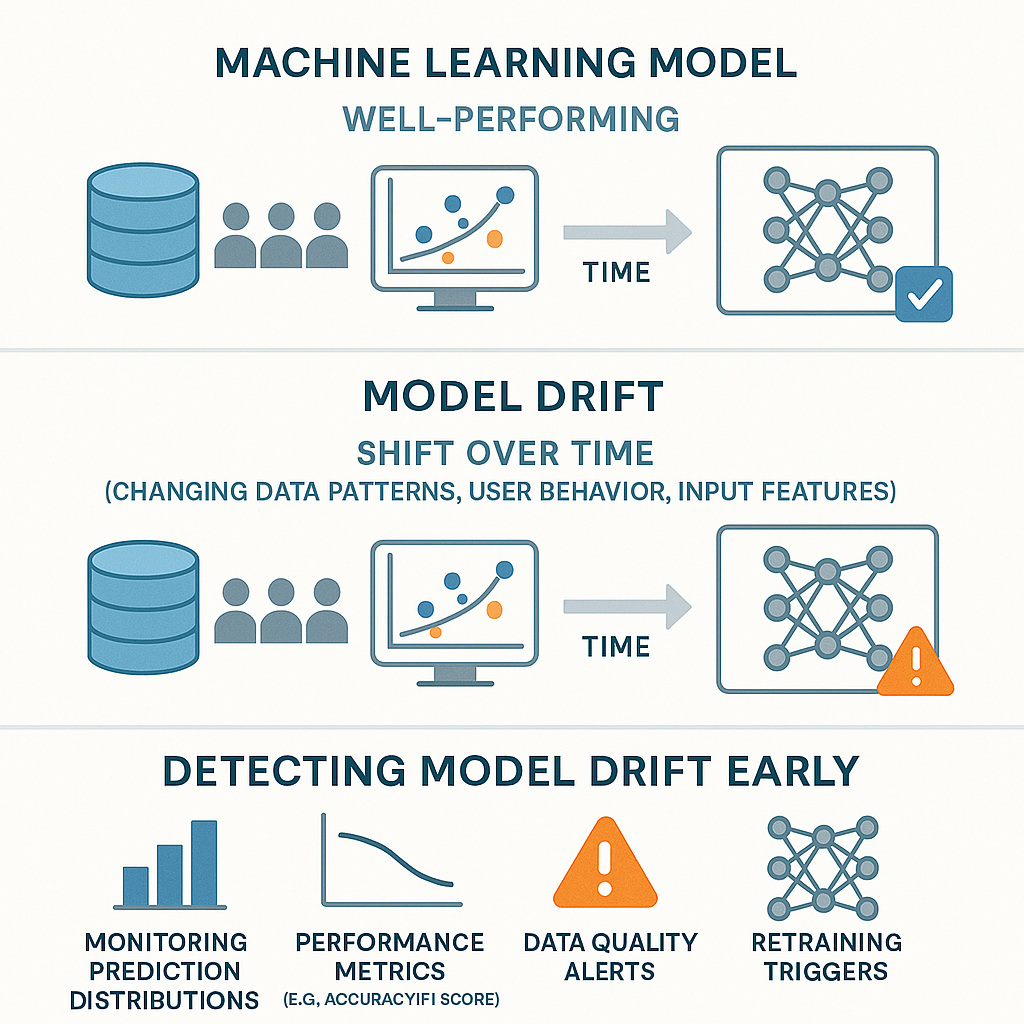

In machine learning, experts build models that learn from data and make accurate predictions. But what if the data starts to shift over time? That’s when problems can crop up, often because of something known as model drift.

Let’s explore what model drift means, why it’s important, and how you can spot it before it turns into a big issue.

What Is Model Drift?

Model drift occurs when a machine learning model that used to make correct predictions starts to slip up. The reason? The data it’s now encountering doesn’t match the data it trained on anymore.

Picture this: you train a model to forecast online shopping trends based on last year’s habits, but shoppers have since changed how they browse, and the model can’t keep up. This change is what we call drift.

There are two main types:

- Data Drift: The distribution of the input data changes. For example, the customer base shifts, so the model sees different kinds of customers than it was trained on.

- Concept Drift: The relationship between the inputs and the output changes. For instance, what people consider a “popular” product might change over time, even if the inputs look the same.
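Here is a toy sketch with made-up numbers that makes the difference between the two types concrete. It assumes a hypothetical frozen "model" that labels a product popular when its weekly views exceed 120. The model was fit on training data that happened to contain no examples between 90 and 130 views, and the true rule was "views above 100". The function names and thresholds are illustrative only.

```python
# Toy illustration with made-up numbers; all names and thresholds are hypothetical.

def model(views: int) -> bool:
    """Frozen model learned from last year's data: 'popular' means more than 120 views."""
    return views > 120


def accuracy(views: list[int], labels: list[bool]) -> float:
    """Fraction of examples where the frozen model agrees with the true label."""
    hits = sum(model(v) == y for v, y in zip(views, labels))
    return hits / len(labels)


# Training-time world: the true rule is "popular if views > 100",
# but the training sample had a gap between 90 and 130 views.
train_views = list(range(0, 100, 10)) + list(range(130, 230, 10))
train_labels = [v > 100 for v in train_views]

# Data drift: new inputs cluster in 100..135, a range the model barely saw.
# The labeling rule itself has not changed.
drift_views = list(range(100, 140, 5))
drift_labels = [v > 100 for v in drift_views]

# Concept drift: same inputs as training, but "popular" now means views > 150.
concept_labels = [v > 150 for v in train_views]

print(f"training accuracy:   {accuracy(train_views, train_labels):.2f}")
print(f"after data drift:    {accuracy(drift_views, drift_labels):.2f}")
print(f"after concept drift: {accuracy(train_views, concept_labels):.2f}")
```

In this sketch, data drift hurts because the new inputs land in a range the model rarely saw. Concept drift hurts even though the inputs are identical to the training data, because only the labels moved.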

Why Does Model Drift Happen?

Drift is common — and often can’t be avoided. Here’s why it occurs:

- Changing user behavior (people’s habits change)

- Seasonal patterns (like Christmas shopping or flu outbreaks)

- New rules or laws (affecting financial or healthcare models)

- Market changes or world events (such as pandemics or rising prices)

- Tech updates (like shifts in data collection methods)

In other words, the world moves on — and your model needs to stay current.

How Model Drift Affects Your Business

Neglecting model drift can lead to serious problems:

- Your model could give wrong results.

- Customers might stop trusting your product.

- You could miss opportunities or make costly decisions.

- In regulated fields like finance or healthcare, it can even create legal or compliance risks.

Keeping models accurate isn’t just a technical problem; it’s a business priority.

Ways to Spot Model Drift

The sooner you notice drift, the easier it is to fix. Here are some practical ways to stay on top of it:

1. Check Model Performance

Regularly check how well your model is doing. If metrics like accuracy, precision, or F1-score start to fall, something might be wrong. Keep in mind that these metrics require ground-truth labels, which often arrive with a delay.
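Below is a minimal sketch of performance monitoring. It assumes that a true label eventually arrives for each prediction. It tracks F1 over a sliding window of recent predictions and flags degradation relative to a baseline. `RollingF1Monitor`, the window size, and the 0.05 tolerance are hypothetical choices. In practice you would likely use a library function such as scikit-learn's `f1_score` rather than the hand-rolled version here.

```python
from collections import deque


def f1_score(y_true: list[int], y_pred: list[int]) -> float:
    """Binary F1 with 1 as the positive class; returns 0.0 if there are no true positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


class RollingF1Monitor:
    """Hypothetical helper: tracks F1 over the most recent labeled predictions."""

    def __init__(self, baseline_f1: float, window: int = 500, tolerance: float = 0.05):
        self.baseline_f1 = baseline_f1      # F1 measured when the model was deployed
        self.tolerance = tolerance          # allowed drop before we call it degraded
        self.pairs = deque(maxlen=window)   # (true_label, predicted_label); oldest drop off

    def record(self, y_true: int, y_pred: int) -> None:
        self.pairs.append((y_true, y_pred))

    def current_f1(self) -> float:
        y_true = [t for t, _ in self.pairs]
        y_pred = [p for _, p in self.pairs]
        return f1_score(y_true, y_pred)

    def degraded(self) -> bool:
        """True when recent F1 has fallen more than `tolerance` below the baseline."""
        return self.current_f1() < self.baseline_f1 - self.tolerance


# Usage with a tiny window so the numbers are easy to follow.
monitor = RollingF1Monitor(baseline_f1=0.90, window=4)
for y_true, y_pred in [(1, 1), (1, 1), (0, 0), (1, 0)]:
    monitor.record(y_true, y_pred)
print(round(monitor.current_f1(), 3), monitor.degraded())
```

A sliding window keeps the metric focused on recent behavior. An all-time average can hide a sudden drop behind months of good results.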

2. Watch Data Changes

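Compare the data coming into the model with the data it was trained on. Are value ranges, averages, or patterns shifting? Statistical measures such as the Population Stability Index (PSI) or the Kolmogorov-Smirnov test, along with the tools below, can help you track these changes.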
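The Population Stability Index is one common way to quantify a shift in a single numeric feature. For each bin it compares the share of training values (expected, e) with the share of production values (actual, a), and sums (a - e) * ln(a / e) over all bins. The sketch below is a minimal, dependency-free version that assumes equal-width bins over the training range. The function name, bin count, and the "customer age" example are illustrative. Other binning schemes, such as quantile bins, are also common.

```python
import math


def psi(reference: list[float], current: list[float], n_bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `current` relative to `reference`.

    Bins are equal-width over the reference range; current values outside that
    range are clipped into the first or last bin. `eps` replaces empty-bin
    proportions so the logarithm is always defined.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # avoid dividing by zero for a constant feature

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clip out-of-range values into edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    expected = proportions(reference)  # training-time distribution
    actual = proportions(current)      # production distribution
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Usage: a hypothetical "customer age" feature before and after the customer base shifts.
train_ages = [float(age) for age in range(20, 60)]
prod_ages = [age + 15 for age in train_ages]
print(f"PSI, no change: {psi(train_ages, train_ages):.3f}")
print(f"PSI, shifted:   {psi(train_ages, prod_ages):.3f}")
```

A commonly cited rule of thumb reads a PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as significant. Treat these cutoffs as starting points to tune for your own data, not fixed standards.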

3. Run Shadow Models

Deploy a newer candidate model alongside the current one. It receives the same live inputs, but only the current model’s predictions reach users. This lets you compare the two and decide whether an update is worth rolling out.
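A shadow deployment can be sketched as a thin wrapper. Every request goes to both models, users receive only the current model's answer, and both predictions are logged for later comparison. `ShadowDeployment` and the two threshold models in the usage lines are hypothetical. A production setup would typically run the shadow call asynchronously so it adds no latency. It would also compare both models against ground-truth labels rather than only measuring agreement.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("shadow")

Model = Callable[[dict], int]  # any function from a feature dict to a class label


class ShadowDeployment:
    """Hypothetical wrapper: users get the primary model; the shadow runs silently."""

    def __init__(self, primary: Model, shadow: Model):
        self.primary = primary
        self.shadow = shadow
        self.total = 0
        self.agreements = 0

    def predict(self, features: dict) -> int:
        served = self.primary(features)
        try:
            candidate = self.shadow(features)
        except Exception:
            # A failing shadow model must never break the user-facing path.
            log.exception("shadow model failed")
            return served
        self.total += 1
        self.agreements += int(candidate == served)
        log.info("features=%s served=%s shadow=%s", features, served, candidate)
        return served  # only the primary prediction ever reaches users

    def agreement_rate(self) -> float:
        """Share of requests where both models agreed (1.0 before any traffic)."""
        return self.agreements / self.total if self.total else 1.0


# Usage: the current model uses an old threshold; the candidate (hypothetically)
# was retrained on recent data and uses a lower one.
deployment = ShadowDeployment(
    primary=lambda f: int(f["views"] > 120),
    shadow=lambda f: int(f["views"] > 100),
)
served = [deployment.predict({"views": v}) for v in [50, 110, 130, 115]]
print(served, deployment.agreement_rate())
```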

4. Create Alerts

Set thresholds that trigger a warning when a performance metric falls too far or a drift score rises too high. This helps you catch problems right away rather than weeks down the line.
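Alerting can start as a simple threshold check that runs after each monitoring job. This is a minimal sketch. The `Threshold` class, metric names, and limits are hypothetical, and the alerts are only logged here. A real system would usually route them to email, chat, or an on-call tool.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("drift-alerts")


@dataclass
class Threshold:
    metric: str            # name of a monitored value, e.g. "f1" or "psi_age"
    limit: float           # boundary that triggers an alert
    higher_is_worse: bool  # True for drift scores, False for accuracy-style metrics


def check_alerts(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Log and return one message per metric that crosses its limit."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            continue  # metric was not computed in this monitoring run
        breached = value > t.limit if t.higher_is_worse else value < t.limit
        if breached:
            message = f"{t.metric}={value:.3f} crossed limit {t.limit}"
            log.warning(message)
            alerts.append(message)
    return alerts


# Usage: F1 has dropped below its floor, while the PSI for "age" is still acceptable.
thresholds = [
    Threshold("f1", limit=0.85, higher_is_worse=False),
    Threshold("psi_age", limit=0.25, higher_is_worse=True),
]
alerts = check_alerts({"f1": 0.80, "psi_age": 0.12}, thresholds)
print(alerts)
```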

5. Update Your Model Often

Don’t put off retraining. Retrain on recent data, either on a regular schedule or as soon as your alerts show drift, so the model stays in line with present conditions.
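A retraining policy often combines a fixed schedule with drift-triggered retraining. The sketch below uses two hypothetical helpers. `should_retrain` fires when the model is older than `max_age` or when any drift alert is present. `recent_window` keeps only recent examples for the next training run. The 30-day and 90-day values are placeholders, not recommendations.

```python
from datetime import datetime, timedelta


def should_retrain(
    last_trained: datetime,
    now: datetime,
    max_age: timedelta,
    drift_alerts: list[str],
) -> tuple[bool, str]:
    """Retrain early if any drift alert fired, otherwise on a fixed schedule."""
    if drift_alerts:
        return True, f"drift detected: {drift_alerts[0]}"
    if now - last_trained >= max_age:
        return True, "scheduled: model is older than max_age"
    return False, "model is current"


def recent_window(records: list[tuple[datetime, dict]], now: datetime, window: timedelta) -> list[dict]:
    """Keep only examples observed within `window` of `now` for the next training run."""
    return [row for ts, row in records if now - ts <= window]


# Usage with placeholder dates and limits.
last_trained = datetime(2024, 1, 1)
print(should_retrain(last_trained, datetime(2024, 1, 20), timedelta(days=30), []))
print(should_retrain(last_trained, datetime(2024, 2, 5), timedelta(days=30), []))
print(should_retrain(last_trained, datetime(2024, 1, 10), timedelta(days=30), ["f1=0.800 crossed limit 0.85"]))

records = [(datetime(2023, 6, 1), {"id": 1}), (datetime(2024, 1, 15), {"id": 2})]
print(recent_window(records, now=datetime(2024, 2, 1), window=timedelta(days=90)))
```

Training only on a recent window helps the model reflect present conditions. However, it can throw away rare but useful older examples, so the window size is a trade-off worth testing.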

Tools That Help Spot Drift

A number of tools and platforms offer features to detect drift:

- Evidently AI – Tracks data and performance drift.

- Seldon Alibi Detect – Open-source Python library for outlier, adversarial, and drift detection.

- Amazon SageMaker Model Monitor – Monitors models deployed on SageMaker for drift in data quality, model quality, bias, and feature attribution.

- Fiddler AI – Provides explainability and keeps an eye on model health.

These tools can cut down on the time you’d spend checking things by hand.